Build Jekyll on Local Machine

06 Feb 2017

Since I started running a GitHub page via Jekyll, I have wanted to build it on my local machine before pushing changes to the repo.

A basic spider was discussed in the previous post; it simply scrapes information from the pages listed in start_urls. However, there are cases where internal links should be followed and certain URLs should be filtered out.

This can be achieved with the help of CrawlSpider. Let's take a look at the steps to develop it.

Create a project with scrapy startproject <project_name>, which generates the project structure. Then define a spider with the following attributes:

- name is wikipedia
- allowed_domains is wikipedia.org
- start_urls is the Wikipedia page to start from: https://en.wikipedia.org/wiki/Mathmetics

The link extractor used in the rule takes two arguments:

- allow is a regular expression that URLs must match to be extracted
- restrict_xpaths is an XPath that defines the regions inside the response from which links should be extracted

As I am targeting all links within the main body of the wiki page, the rule can be written as below.

Rule(LinkExtractor(allow="https://en.wikipedia.org/wiki/", restrict_xpaths="//div[@class='mw-body']//a"), callback='parse_page', follow=False)
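To make the role of allow more concrete, here is a rough, standard-library-only sketch of the filtering idea. It assumes the pattern is applied as a regex search against each absolute URL; it is an approximation for illustration, not Scrapy's actual implementation. The helper is_allowed and the candidate URLs are hypothetical.

```python
import re

# Pattern copied from the rule above; `allow` is treated as a regular expression.
ALLOW = re.compile(r"https://en.wikipedia.org/wiki/")


def is_allowed(url, pattern=ALLOW):
    """Return True if the pattern matches anywhere in the URL (hypothetical helper)."""
    return pattern.search(url) is not None


# Hypothetical candidate links, for illustration only.
candidates = [
    "https://en.wikipedia.org/wiki/Greek_mathematics",
    "https://en.wikipedia.org/w/index.php?title=Special:Search",
    "https://fr.wikipedia.org/wiki/Euclide",
]

kept = [url for url in candidates if is_allowed(url)]
print(kept)
```

Only the first candidate survives: the second is not under /wiki/, and the third is on a different language subdomain.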

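Putting it together, the spider looks like this:

    # scrapy.contrib.* has been deprecated since Scrapy 1.0; use the new module paths
    from scrapy.item import Item, Field
    from scrapy.spiders import CrawlSpider, Rule
    from scrapy.linkextractors import LinkExtractor


    # Normally defined in the project's items.py; shown here so the spider is self-contained
    class WikiItem(Item):
        name = Field()


    class WikiSpider(CrawlSpider):
        name = 'wikipedia'
        allowed_domains = ['wikipedia.org']
        start_urls = ["https://en.wikipedia.org/wiki/Mathmetics"]

        # Extract links in the main body that match the allow pattern and
        # parse each with parse_page, without following links found on those pages
        rules = (
            Rule(LinkExtractor(allow="https://en.wikipedia.org/wiki/", restrict_xpaths="//div[@class='mw-body']//a"), callback='parse_page', follow=False),
        )

        def parse_page(self, response):
            item = WikiItem()
            # extract() returns a list of every matching text node
            item["name"] = response.xpath('//h1[@class="firstHeading"]/text()').extract()
            return item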

Run scrapy crawl wikipedia -o out.json, where wikipedia is the spider name. Scrapy will make a call to the URL, the responses will be parsed, and the scraped items are written to out.json:

    [
    {"name": ["Wikipedia:Protection policy"]},
    {"name": ["Giuseppe Peano"]},
    {"name": ["Euclid's "]},
    {"name": ["Greek mathematics"]}
    ...
    ]
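Each name in the output is a list because extract() returns every matching text node rather than a single string. A minimal sketch of flattening those lists after the crawl is shown below; it uses only the standard library, the sample is copied from the output above, and flatten_names is a hypothetical helper, not part of Scrapy.

```python
import json

# Sample copied from the output above (the elided entries are left out).
raw = '''[
{"name": ["Wikipedia:Protection policy"]},
{"name": ["Giuseppe Peano"]},
{"name": ["Euclid's "]},
{"name": ["Greek mathematics"]}
]'''


def flatten_names(records):
    """Join each record's text fragments into one string and trim whitespace."""
    return ["".join(rec.get("name", [])).strip() for rec in records]


names = flatten_names(json.loads(raw))
print(names)
```

In practice the same function could be applied to the contents of out.json loaded with json.load.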

See source code

This post explains how to create a new Scrapy project using the command prompt and a text editor such as Notepad++. It assumes you have already installed the Scrapy package on your machine; I am using Scrapy version 1.1.2. See the steps below.

Create a project with scrapy startproject <project_name>, which generates the project structure. Then define the item in the project's items module:

    from scrapy.item import Item, Field


    class JobItem(Item):
        title = Field()
        link = Field()

from scrapy import Spider

from scrapy.selector import Selector

from quick_scrapy.items import JobItem

class JobSpider(Spider):

name = 'jobs'

allow_domains = ['seek.com.au']

start_urls = [

'https://www.seek.com.au/jobs?keywords=software+engineer'

]

def parse(self, reponse):

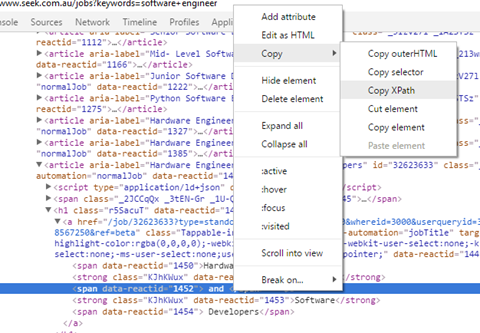

titles = reponse.xpath('//article')

items = []

for each in titles:

item = JobItem()

item["title"] = each.xpath('@aria-label').extract()

item["link"] = each.xpath('h1/a/@href').extract()

print item['title'], item['link']

return items

Run scrapy crawl jobs -o out.json, where jobs is the spider name. Scrapy will make a call to the URL, the response will be parsed, and the scraped items are written to out.json:

    [
    {
    "link": ["/job/32678977?type=promoted&tier=no_tier&pos=1&whereid=3000&userqueryid=36d7d8b41696017af4c442da6bbf62e8-2435637&ref=beta"],
    "title": ["Senior Software Engineer as Tester"]
    },
    {
    "link": ["/job/32635906?type=promoted&tier=no_tier&pos=2&whereid=3000&userqueryid=36d7d8b41696017af4c442da6bbf62e8-2435637&ref=beta"],
    "title": ["Software Designer"]
    },
    {
    "link": ["/job/32691890?type=standard&tier=no_tier&pos=1&whereid=3000&userqueryid=36d7d8b41696017af4c442da6bbf62e8-2435637&ref=beta"],
    "title": ["Mid-Level Software Developer (.Net)"]
    },
    ...]
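The scraped links are relative to the site root, so they usually need to be resolved against the page URL before they can be used. The sketch below shows one way to do this with the standard library; absolute_links is a hypothetical helper, and the record uses a shortened, illustrative version of a link from the output above. Inside a spider, response.urljoin(href) should give the same result.

```python
from urllib.parse import urljoin

# Start URL from the spider; relative links are resolved against it.
BASE_URL = "https://www.seek.com.au/jobs?keywords=software+engineer"


def absolute_links(record, base=BASE_URL):
    """Resolve every relative href in a scraped record against the base URL."""
    return [urljoin(base, href) for href in record.get("link", [])]


# Shortened, illustrative record (query string trimmed for readability).
record = {
    "link": ["/job/32635906?type=promoted"],
    "title": ["Software Designer"],
}

links = absolute_links(record)
print(links)
```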

See source code